It’s a night in 1531, in the city of Venice. In a printer’s workshop, an apprentice labors over the layout of a page destined for an astronomy textbook: a dense line of type and a woodblock illustration of a cherubic head observing shapes moving through the cosmos, representing a lunar eclipse.

Like every aspect of book production in the sixteenth century, it’s a time-consuming process, but one that allows knowledge to spread with unprecedented speed.

Five hundred years later, the production of information is a different beast entirely: terabytes of images, video, and text in torrents of digital data that circulate almost instantly and must be analyzed nearly as quickly, allowing (and requiring) the training of machine-learning models to sort through the flow. This shift in the production of information has implications for the future of everything from art creation to drug development.

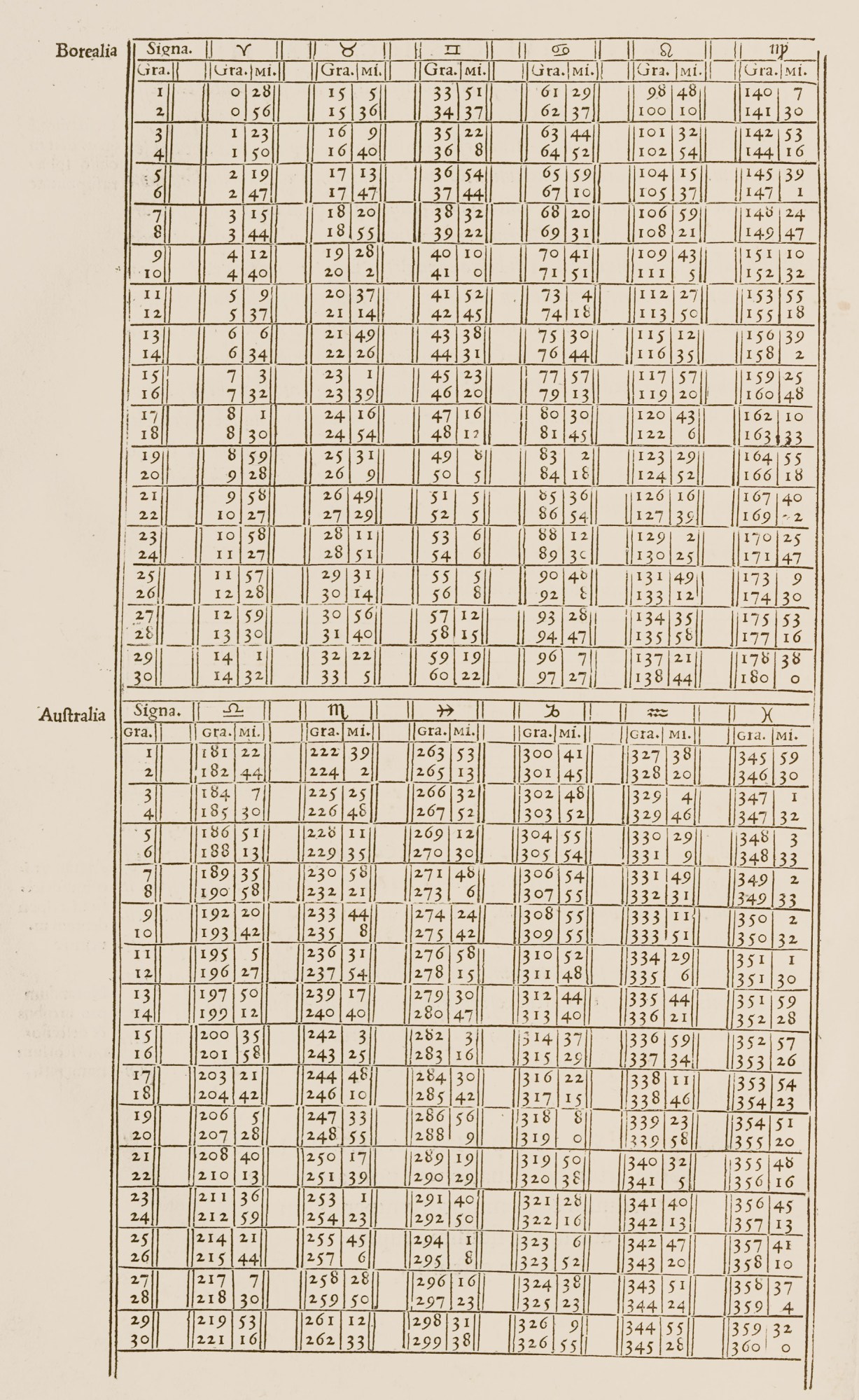

But those advances are also making it possible to look differently at data from the past. Historians have begun using machine learning, and deep neural networks in particular, to examine historical documents, including astronomical tables like those produced in Venice and other early modern cities, smudged by centuries spent in mildewed archives or distorted by the slip of a printer’s hand.

Historians say the application of modern computer science to the distant past helps draw connections across a broader swath of the historical record than would otherwise be possible, correcting distortions that come from analyzing history one document at a time. But it introduces distortions of its own, including the risk that machine learning will slip bias or outright falsifications into the historical record. All this adds up to a question for historians and others who, it’s often argued, understand the present by examining history: With machines set to play a greater role in the future, how much should we cede to them of the past?

Parsing complexity

Big data has come to the humanities through initiatives to digitize increasing numbers of historical documents, such as the Library of Congress’s collection of millions of newspaper pages and the Finnish Archives’ court records dating back to the nineteenth century. For researchers, this is at once a problem and an opportunity: there is far more information, and often there has been no existing way to sift through it.

That challenge has been met with the development of computational tools that help scholars parse complexity. In 2009, Johannes Preiser-Kapeller, a professor at the Austrian Academy of Sciences, was examining a registry of decisions from the 14th-century Byzantine Church. Realizing that making sense of hundreds of documents would require a systematic digital survey of bishops’ relationships, Preiser-Kapeller built a database of individuals and used network analysis software to reconstruct their connections.

This reconstruction revealed hidden patterns of influence, leading Preiser-Kapeller to argue that the bishops who spoke the most in meetings weren’t the most influential. He has since applied the technique to other networks, including the 14th-century Byzantine elite, uncovering ways in which its social fabric was sustained through the hidden contributions of women. “We were able to identify, to a certain extent, what was going on outside the official narrative,” he says.
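The article doesn’t say which measures Preiser-Kapeller’s software computed. As a minimal sketch, assuming that “influence” is approximated by betweenness centrality (how often a person sits on the shortest path between two others) and that ties come from appearing together in the same record, the idea can be written in plain Python using Brandes’ algorithm. The names, records, and speech counts below are hypothetical.

```python
from collections import deque
from itertools import combinations


def build_graph(records):
    """Link every pair of people who appear together in a record (undirected graph)."""
    graph = {}
    for names in records:
        for name in names:
            graph.setdefault(name, set())
        for a, b in combinations(sorted(set(names)), 2):
            graph[a].add(b)
            graph[b].add(a)
    return graph


def betweenness(graph):
    """Brandes' algorithm for an unweighted, undirected graph (unnormalized scores)."""
    score = dict.fromkeys(graph, 0.0)
    for source in graph:
        # Breadth-first search counts the shortest paths from `source` to every node.
        order, preds = [], {v: [] for v in graph}
        sigma, dist = dict.fromkeys(graph, 0), dict.fromkeys(graph, -1)
        sigma[source], dist[source] = 1, 0
        queue = deque([source])
        while queue:
            v = queue.popleft()
            order.append(v)
            for w in graph[v]:
                if dist[w] < 0:
                    dist[w] = dist[v] + 1
                    queue.append(w)
                if dist[w] == dist[v] + 1:
                    sigma[w] += sigma[v]
                    preds[w].append(v)
        # Walk back from the farthest nodes, accumulating each node's share of paths.
        delta = dict.fromkeys(graph, 0.0)
        for w in reversed(order):
            for v in preds[w]:
                delta[v] += sigma[v] / sigma[w] * (1 + delta[w])
            if w != source:
                score[w] += delta[w]
    # Every undirected path was counted once from each end.
    return {v: s / 2 for v, s in score.items()}


# Hypothetical data: two factions joined only through Isidore.
records = [
    ["Gregory", "Basil", "John"],
    ["Gregory", "Basil"],
    ["Nicholas", "Theodore", "Michael"],
    ["Isidore", "John"],
    ["Isidore", "Nicholas"],
]
speeches = {"Gregory": 12, "Basil": 5, "John": 4, "Nicholas": 3, "Isidore": 2}

centrality = betweenness(build_graph(records))
print(max(speeches, key=speeches.get))      # most vocal bishop
print(max(centrality, key=centrality.get))  # best-placed broker between factions
```

In this toy network the most vocal participant (Gregory) and the best-placed broker (Isidore) are different people. Betweenness is only one possible stand-in for influence; a historian still has to decide whether a broker’s position in the record reflects real power.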

Preiser-Kapeller’s work is but one example of this trend in scholarship. But until recently, machine learning has often been unable to draw conclusions from ever larger collections of text, not least because certain aspects of historical documents (in Preiser-Kapeller’s case, poorly handwritten Greek) made them indecipherable to machines. Now advances in deep learning have begun to address these limitations, using networks loosely modeled on the human brain to pick out patterns in large and complex data sets.

Nearly 800 years ago, the 13th-century astronomer Johannes de Sacrobosco published the Tractatus de sphaera, an introductory treatise on the geocentric cosmos. That treatise became required reading for early modern university students. It was the most widely distributed textbook on geocentric cosmology, enduring even after the Copernican revolution upended the geocentric view of the cosmos in the sixteenth century.

The treatise is also the star player in a digitized collection of 359 astronomy textbooks published between 1472 and 1650: 76,000 pages, including tens of thousands of scientific illustrations and astronomical tables. In that comprehensive data set, Matteo Valleriani, a professor with the Max Planck Institute for the History of Science, saw an opportunity to trace the evolution of European knowledge toward a shared scientific worldview. But he realized that discerning the pattern required more than human capabilities. So Valleriani and a team of researchers at the Berlin Institute for the Foundations of Learning and Data (BIFOLD) turned to machine learning.

This required dividing the collection into three categories: text parts (sections of writing on a specific subject, with a clear beginning and end); scientific illustrations, which helped illuminate concepts such as a lunar eclipse; and numerical tables, which were used to teach mathematical aspects of astronomy.

At the outset, Valleriani says, the text defied algorithmic interpretation. For one thing, typefaces varied widely; early modern print shops developed unique ones for their books and often had their own metallurgic workshops to cast their letters. This meant that a model using natural-language processing (NLP) to read the text would need to be retrained for each book.

The language also posed a problem. Many texts were written in regionally specific Latin dialects often unrecognizable to machines that haven’t been trained on historical languages. “This is a big limitation in general for natural-language processing, when you don’t have the vocabulary to train in the background,” says Valleriani. This is part of the reason NLP works well for dominant languages like English but is less effective on, say, ancient Hebrew.

Instead, researchers manually extracted the text from the source materials and identified single links between sets of documents, for instance when a text was imitated or translated in another book. This data was placed in a graph, which automatically embedded those single links in a network containing all the records (researchers then used the graph to train a machine-learning method that can suggest connections between texts). That left the visual elements of the texts, 20,000 illustrations and 10,000 tables, which researchers studied using neural networks.

Present tense

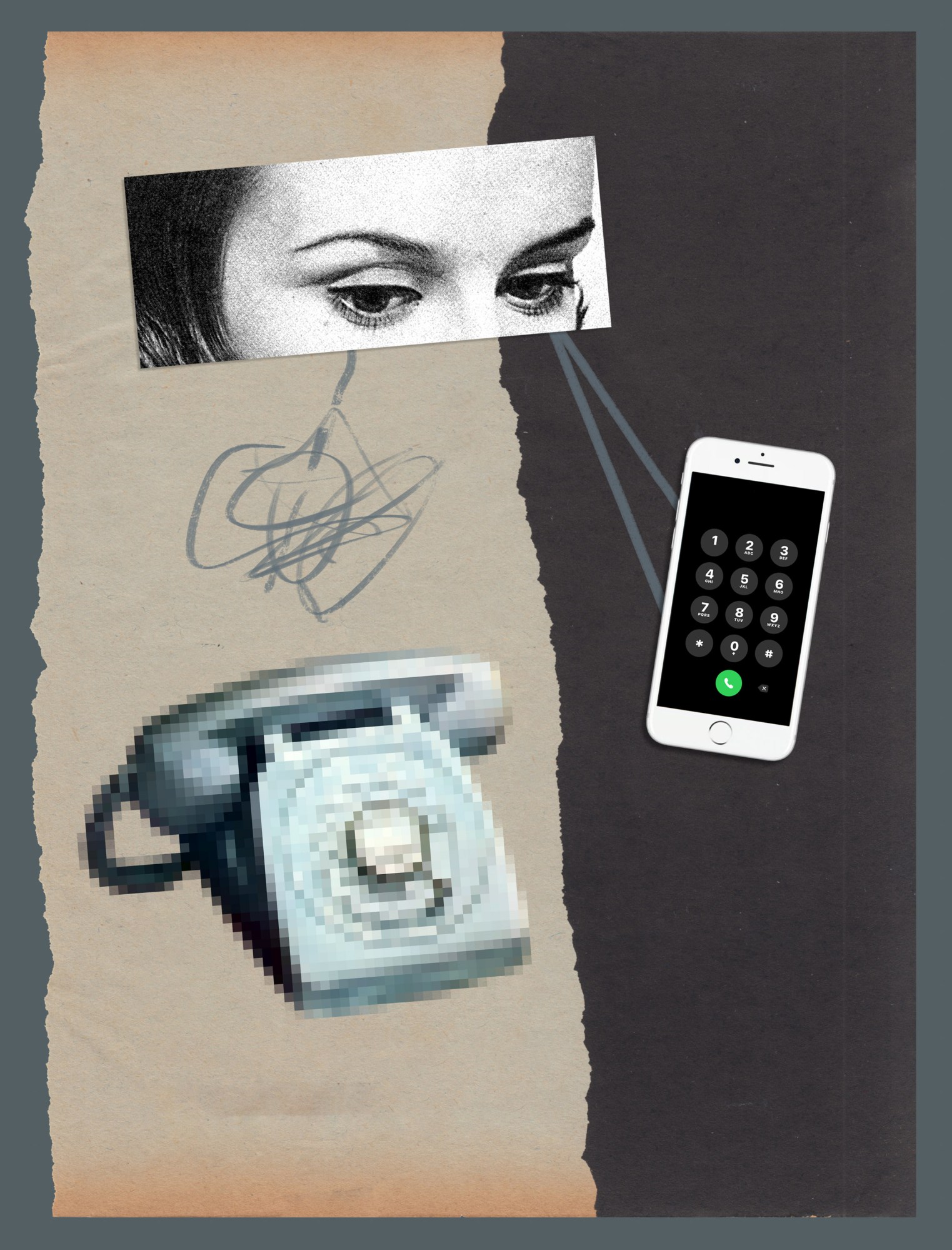

Computer vision for historical images faces challenges similar to those of NLP; it has what Lauren Tilton, an associate professor of digital humanities at the University of Richmond, calls a “present-ist” bias. Many AI models are trained on data sets from the last 15 years, says Tilton, and the objects they’ve learned to list and identify tend to be features of contemporary life, like cell phones or cars. Computers often recognize only contemporary iterations of objects that have a longer history: think iPhones and Teslas, rather than switchboards and Model Ts. To top it off, models are typically trained on high-resolution color images rather than the grainy black-and-white photographs of the past (or early modern depictions of the cosmos, inconsistent in appearance and degraded by the passage of time). All this makes computer vision less accurate when applied to historical images.

“We’ll talk to computer science people, and they’ll say, ‘Well, we solved object detection,’” she says. “And we’ll say, actually, if you take a set of photos from the 1930s, you’re going to see it hasn’t quite been as solved as we think.” Deep-learning models, which can identify patterns in large quantities of data, can help because they’re capable of greater abstraction.

In the case of the Sphaera project, BIFOLD researchers trained a neural network to detect, classify, and cluster (according to similarity) illustrations from early modern texts; that model is now accessible to other historians via a public web service called CorDeep. They also took a novel approach to analyzing other data. For example, tables found throughout the hundreds of books in the collection couldn’t be compared visually because “the same table can be printed 1,000 different ways,” Valleriani explains. So researchers developed a neural network architecture that detects and clusters similar tables on the basis of the numbers they contain, ignoring their layout.
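BIFOLD’s table model is a neural network whose architecture isn’t described here. The toy sketch below swaps in a much simpler, non-neural technique to show the underlying idea of comparing tables by content rather than appearance: each table becomes a multiset of the numbers it contains, so reordering rows or changing the layout leaves its signature unchanged. It assumes the tables have already been transcribed to text; the table IDs, contents, and the 0.8 threshold are hypothetical.

```python
import re
from collections import Counter


def numeric_signature(table_text):
    """Multiset of the numbers in a table, ignoring rows, columns, and spacing."""
    return Counter(re.findall(r"\d+", table_text))


def similarity(a, b):
    """Multiset Jaccard index: shared numbers divided by all numbers (0.0 to 1.0)."""
    union = sum((a | b).values())
    return sum((a & b).values()) / union if union else 0.0


def cluster(tables, threshold=0.8):
    """Greedy single pass: join the first cluster whose seed table is similar enough."""
    clusters = []  # list of (seed signature, [table ids])
    for table_id, text in tables.items():
        sig = numeric_signature(text)
        for seed, members in clusters:
            if similarity(seed, sig) >= threshold:
                members.append(table_id)
                break
        else:
            clusters.append((sig, [table_id]))
    return [members for _, members in clusters]


# Hypothetical transcriptions: the first two hold the same numbers in different layouts.
tables = {
    "wittenberg_1538": "Sign  Deg  Min\nAries 12 30\nTaurus 14 45",
    "paris_1550": "Taurus 14 45 | Aries 12 30",
    "venice_1531": "Eclipse 1532 7 18\nEclipse 1533 2 9",
}
print(cluster(tables))
```

A real corpus would need fuzzier matching than exact number overlap, since printers also introduced errors into the numbers themselves.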

So far, the project has yielded some surprising results. One pattern found in the data allowed researchers to see that while Europe was fracturing along religious lines after the Protestant Reformation, scientific knowledge was coalescing. The scientific texts being printed in places such as the Protestant city of Wittenberg, which had become a center for scholarly innovation thanks to the work of Reformed scholars, were being imitated in hubs like Paris and Venice before spreading across the continent. The Protestant Reformation isn’t exactly an understudied subject, Valleriani says, but a machine-mediated perspective allowed researchers to see something new: “This was absolutely not clear before.” Models applied to the tables and images have begun to return similar patterns.

These tools offer possibilities more significant than simply keeping track of 10,000 tables, says Valleriani. Instead, they allow researchers to draw inferences about the evolution of knowledge from patterns in clusters of records, even if they’ve actually examined only a handful of documents. “By looking at two tables, I can already make a huge conclusion about 200 years,” he says.

Deep neural networks are also playing a role in examining even older history. Deciphering inscriptions (known as epigraphy) and restoring damaged examples are painstaking tasks, especially when inscribed objects have been moved or are missing contextual cues. Specialized historians must make educated guesses. To help, Yannis Assael, a research scientist with DeepMind, and Thea Sommerschield, a postdoctoral fellow at Ca’ Foscari University of Venice, developed a neural network called Ithaca, which can reconstruct missing portions of inscriptions and attribute dates and locations to the texts. Researchers say the deep-learning approach, which involved training on a data set of more than 78,000 inscriptions, is the first to address restoration and attribution jointly, through learning from large amounts of data.

So far, Assael and Sommerschield say, the approach is shedding light on inscriptions of decrees from an important period in classical Athens, which have long been attributed to 446 and 445 BCE, a date some historians have disputed. As a test, researchers trained the model on a data set that did not contain the inscriptions in question, and then asked it to analyze the text of the decrees. This produced a different date. “Ithaca’s average predicted date for the decrees is 421 BCE, aligning with the most recent dating breakthroughs and showing how machine learning can contribute to debates around one of the most significant moments in Greek history,” they said by email.

Time machines

Other projects propose to use machine learning to draw even broader inferences about the past. This was the motivation behind the Venice Time Machine, one of several local “time machines” established across Europe to reconstruct local history from digitized records. The Venetian state archives cover 1,000 years of history spread across 80 kilometers of shelves; the researchers’ aim was to digitize these records, many of which had never been examined by modern historians. They would use deep-learning networks to extract information and, by tracing names that appear in the same document across other documents, reconstruct the ties that once bound Venetians.

Frédéric Kaplan, president of the Time Machine Organization, says the project has now digitized enough of the city’s administrative documents to capture the texture of the city in centuries past, making it possible to go building by building and identify the families who lived there at different points in time. “These are hundreds of thousands of documents that need to be digitized to reach this kind of flexibility,” says Kaplan. “This has never been done before.”

Still, when it comes to the project’s ultimate promise (no less than a digital simulation of medieval Venice down to the neighborhood level, through networks reconstructed by artificial intelligence), historians like Johannes Preiser-Kapeller, the Austrian Academy of Sciences professor who ran the study of Byzantine bishops, say the project hasn’t been able to deliver, because the model can’t understand which connections are meaningful.

Days of future past: Three key projects underway in the digital humanities

-

CorDeep

Who: Max Planck Institute for the History of Science

What: A web-based application for classifying content from historical documents that include numerical and alphanumerical tables. The software can locate, extract, and classify visual elements designated “content illustrations,” “initials,” “decorations,” and “printer’s marks.”

-

ITHACA

Who: DeepMind

What: A deep neural network trained to simultaneously perform the tasks of textual restoration, geographic attribution, and chronological attribution, previously performed by epigraphers.

-

Venice Time Machine Project

Who: École Polytechnique Fédérale de Lausanne, Ca’ Foscari, and the State Archives of Venice

What: A digitized collection of the Venetian state archives, which cover 1,000 years of history. Once it’s completed, researchers will use deep learning to reconstruct historical social networks.

Preiser-Kapeller has done his own experiment using automatic detection to build networks from documents (extracting network information with an algorithm, rather than having an expert extract information to feed into the network, as in his work on the bishops), and he says it produces a lot of “artificial complexity” but nothing that serves historical interpretation. The algorithm was unable to distinguish instances where two people’s names appeared on the same roll of taxpayers from cases where they were on a marriage certificate, so, as Preiser-Kapeller says, “What you really get has no explanatory value.” It’s a limitation historians have highlighted with machine learning, similar to the point people have made about large language models like ChatGPT: because models ultimately don’t understand what they’re reading, they can arrive at absurd conclusions.
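A toy illustration of the problem Preiser-Kapeller describes, assuming names have already been extracted and each document’s type is known (the records and type labels here are hypothetical). Pure co-occurrence turns a tax roll and a marriage certificate into the same kind of tie; keeping the source type on each edge at least lets the two be told apart.

```python
from collections import defaultdict
from itertools import combinations

# Hypothetical extracted records: (document type, names found in the document).
records = [
    ("tax_roll", ["Marco", "Lucia", "Pietro", "Anna"]),
    ("marriage", ["Marco", "Lucia"]),
]


def untyped_edges(records):
    """Co-occurrence only: every pair on any document becomes the same kind of tie."""
    edges = set()
    for _, names in records:
        edges.update(combinations(sorted(names), 2))
    return edges


def typed_edges(records):
    """Keep the document type on each tie so different relationships stay distinct."""
    edges = defaultdict(set)
    for doc_type, names in records:
        for pair in combinations(sorted(names), 2):
            edges[pair].add(doc_type)
    return edges


untyped = untyped_edges(records)
typed = typed_edges(records)
print(len(untyped))                        # every tie looks identical
print(sorted(typed[("Lucia", "Marco")]))   # this pair is linked by two kinds of document
```

Even the typed version only records that a document connects two people, not what that connection meant; that interpretation still falls to the historian.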

It’s true that with the sources currently available, human interpretation is needed to provide context, says Kaplan, though he thinks this could change once a sufficient number of historical documents are made machine readable.

But he imagines an application of machine learning that’s more transformational, and potentially more problematic. Generative AI could be used to make predictions that flesh out blank spots in the historical record (for instance, about the number of apprentices in a Venetian artisan’s workshop) based not on individual records, which could be inaccurate or incomplete, but on aggregated data. This may bring more non-elite perspectives into the picture but runs counter to standard historical practice, in which conclusions are based on available evidence.

Still, a more immediate concern is posed by neural networks that create false records.

Is it real?

On YouTube, viewers can now watch Richard Nixon make a speech that had been written in case the 1969 moon landing ended in disaster but fortunately never had to be delivered. Researchers created the deepfake to show how AI could affect our shared sense of history. In seconds, one can generate false images of major historical events like the D-Day landings, as Northeastern history professor Dan Cohen discussed recently with students in a class dedicated to exploring the way digital media and technology are shaping historical study. “[The photos are] entirely convincing,” he says. “You can stick a whole bunch of people on a beach and with a tank and a machine gun, and it looks perfect.”

False history is nothing new (Cohen points to the way Joseph Stalin ordered enemies to be erased from history books, for example), but the scale and speed with which fakes can be created is breathtaking, and the problem goes beyond images. Generative AI can create texts that read plausibly like a parliamentary speech from the Victorian era, as Cohen has done with his students. By generating historical handwriting or typefaces, it could also create what looks convincingly like a written historical record.

Meanwhile, AI chatbots like Character.ai and Historical Figures Chat allow users to simulate interactions with historical figures. Historians have raised concerns about these chatbots, which may, for example, make some individuals seem less racist and more remorseful than they actually were.

In other words, there’s a risk that artificial intelligence, from historical chatbots to models that make predictions based on historical records, will get things very wrong. Some of these mistakes are benign anachronisms: a query to Aristotle on the chatbot Character.ai about his views on women (whom he saw as inferior) returned an answer that they should “have no social media.” But others could be more consequential, especially when they’re mixed into a collection of documents too large for a historian to check individually, or if they’re circulated by someone with an interest in a particular interpretation of history.

Even when there’s no deliberate deception, some scholars are concerned that historians may use tools they’re not trained to understand. “I think there’s great risk in it, because we as humanists or historians are effectively outsourcing analysis to another field, or perhaps a machine,” says Abraham Gibson, a history professor at the University of Texas at San Antonio. Gibson says that until very recently, fellow historians he spoke to didn’t see the relevance of artificial intelligence to their work, but they’re increasingly waking up to the possibility that they could eventually cede some of the interpretation of history to a black box.

This “black box” problem is not unique to history: even developers of machine-learning systems sometimes struggle to understand how they function. Fortunately, some methods designed with historians in mind are structured to provide greater transparency. Ithaca produces a range of hypotheses ranked by probability, and BIFOLD researchers are working on interpreting their models with explainable AI, which is meant to reveal which inputs contribute most to predictions. Historians say they themselves promote transparency by encouraging people to view machine learning with critical detachment: as a useful tool, but one that’s fallible, just like people.
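Ithaca’s internals aren’t described in this article. As a minimal sketch of what “hypotheses ranked by probability” can mean, assuming a model that gives each candidate restoration a raw score, a softmax turns those scores into probabilities that can be sorted and shown to a historian. The candidate words and scores below are hypothetical.

```python
import math


def rank_hypotheses(scores, top_k=3):
    """Convert raw scores to probabilities with a softmax and return the top_k, best first."""
    peak = max(scores.values())  # subtracting the max keeps math.exp from overflowing
    weights = {h: math.exp(s - peak) for h, s in scores.items()}
    total = sum(weights.values())
    ranked = sorted(((h, w / total) for h, w in weights.items()),
                    key=lambda pair: pair[1], reverse=True)
    return ranked[:top_k]


# Hypothetical candidate restorations for one damaged word, with made-up scores.
scores = {"demos": 2.0, "nomos": 1.0, "logos": 0.0}
for hypothesis, prob in rank_hypotheses(scores):
    print(f"{hypothesis}: {prob:.2f}")
```

Presenting several ranked options, rather than a single answer, leaves the final judgment with the epigrapher.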

The historians of tomorrow

While skepticism toward such new technology persists, the field is gradually embracing it, and Valleriani thinks that in time, the number of historians who reject computational methods will dwindle. Scholars’ concerns about the ethics of AI are less a reason not to use machine learning, he says, than an opportunity for the humanities to contribute to its development.

As the French historian Emmanuel Le Roy Ladurie wrote in 1968, in response to the work of historians who had begun experimenting with computational history to investigate questions such as voting patterns in the British parliament in the 1840s, “the historian of tomorrow will be a programmer, or he will not exist.”